Planning and deploying big data systems is hard. Equally hard, and some might say harder, is big data QA and testing. Many questions need to be answered before taking the first steps toward a test plan. Shortcomings in testing can result in poor data quality, poor performance, and a less-than-stellar user experience. A big data Hadoop implementation involves writing complex Pig and Hive programs and running these jobs with the Hadoop MapReduce framework on huge volumes of data across different nodes.

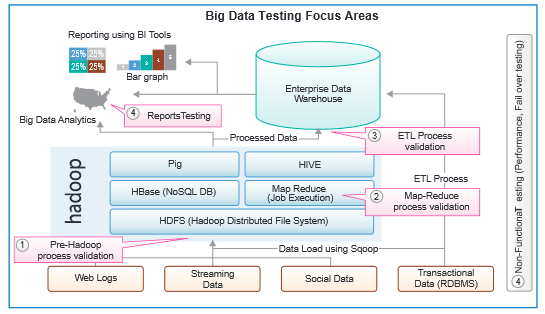

Use the figure above as a quick guide to zero in on the points along the data flow path to target for quality assurance.

Here are five quick steps to get your testing efforts on the right path:

- Document the high-level cloud test infrastructure design (disk space, RAM required for each node, etc.)

- Identify the cloud infrastructure service provider

- Document the SLAs, communication plan, maintenance plan, and environment refresh plan

- Document the data security plan

- Document the high-level test strategy, testing release cycles, testing types, volume of data processed by Hadoop, and third-party tools

Source: Infosys Research Papers, 2013
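One way to keep the five steps above honest is to record them as a small, checkable structure rather than as scattered documents. The sketch below is only an illustration: `NodeSpec`, `EnvironmentPlan`, `missing_items`, and `total_capacity` are hypothetical names made up for this example, and the node figures are placeholders, not sizing recommendations.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Documents named in step 3 of the checklist.
REQUIRED_OPS_DOCS = (
    "SLAs",
    "communication plan",
    "maintenance plan",
    "environment refresh plan",
)


@dataclass
class NodeSpec:
    """Resources planned for one node role in the test cluster (hypothetical)."""
    role: str
    count: int
    disk_gb: int
    ram_gb: int


@dataclass
class EnvironmentPlan:
    """One field per checklist step; an empty value means 'not documented yet'."""
    nodes: List[NodeSpec] = field(default_factory=list)      # step 1
    provider: str = ""                                        # step 2
    ops_docs: Dict[str, str] = field(default_factory=dict)    # step 3
    security_plan: str = ""                                   # step 4
    test_strategy: str = ""                                   # step 5


def missing_items(plan: EnvironmentPlan) -> List[str]:
    """Return the checklist items that have not been filled in."""
    gaps = []
    if not plan.nodes:
        gaps.append("infrastructure design")
    if not plan.provider:
        gaps.append("service provider")
    for name in REQUIRED_OPS_DOCS:
        if not plan.ops_docs.get(name):
            gaps.append(name)
    if not plan.security_plan:
        gaps.append("data security plan")
    if not plan.test_strategy:
        gaps.append("test strategy")
    return gaps


def total_capacity(plan: EnvironmentPlan) -> Tuple[int, int]:
    """Sum planned disk and RAM (in GB) across all nodes."""
    disk = sum(n.count * n.disk_gb for n in plan.nodes)
    ram = sum(n.count * n.ram_gb for n in plan.nodes)
    return disk, ram


if __name__ == "__main__":
    # Placeholder values for illustration only.
    plan = EnvironmentPlan(
        nodes=[NodeSpec("worker", 4, 2000, 64), NodeSpec("master", 1, 500, 32)],
        provider="example-cloud",
    )
    print("Still to document:", missing_items(plan))
    print("Planned disk/RAM (GB):", total_capacity(plan))
```

Running a check like `missing_items` before each test cycle gives a quick view of which parts of the plan are still undocumented; the field names can be adapted to whatever the team's test plan template already uses.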