Much has been said and written about the NSA, its charter and so on. This post is not about any of that; I will focus entirely on a new tool that the NSA has developed to meet its needs. Recently, Paul Burkhardt and Chris Waring presented a talk titled "An NSA Big Graph experiment" at Carnegie Mellon University. The following is from the presentation.

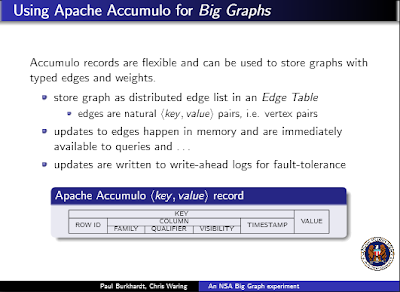


Problem Statement - How to handle graph analysis needs at big-data scale?

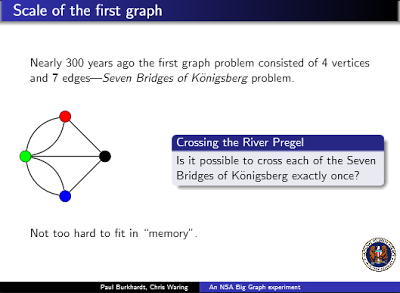

Growth of Graphing Over Time - The following five slides tell a good story and need no help from me.

Solution - Development and release of a new graph store tool, Accumulo

- Accumulo was tested on a 1,200-node cluster holding over a petabyte of data with 70 trillion edges. (Massive scalability)

- Performance scaled linearly from 1 trillion to 70 trillion edges. In other words, an organization that wants to store more data can maintain performance simply by adding more commodity hardware. (Easy horizontal scaling)
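To put the test figures in perspective, the arithmetic below spreads them over the cluster. Two assumptions are mine, not the talk's: the load is spread evenly across nodes, and "a petabyte" is taken as 10^15 bytes. Because the data set was "over a petabyte", the byte figures are lower bounds. The `nodes_needed` helper is hypothetical and simply restates the linear-scaling claim: keeping per-node load constant means node count grows in proportion to edge count.

```python
# Back-of-the-envelope load per node, using only the figures quoted above.
# Assumptions (not from the talk): edges and bytes are spread evenly across
# nodes, and "a petabyte" means 10**15 bytes. Because the data set was
# "over a petabyte", the byte figures below are lower bounds.

NODES = 1_200              # cluster size from the talk
EDGES = 70 * 10**12        # 70 trillion edges
BYTES = 10**15             # one (decimal) petabyte, a lower bound


def per_node_load() -> dict:
    """Average load per node under the even-spread assumption."""
    return {
        "edges_per_node": EDGES / NODES,
        "min_bytes_per_edge": BYTES / EDGES,
        "min_gb_per_node": BYTES / NODES / 10**9,
    }


def nodes_needed(target_edges: int) -> int:
    """Hypothetical helper: nodes needed to keep the same edges-per-node load,
    assuming scaling stays linear. Integer ceiling division avoids float rounding.
    """
    return (target_edges * NODES + EDGES - 1) // EDGES


load = per_node_load()
print(f"{load['edges_per_node']:.3g} edges per node")              # 5.83e+10 edges per node
print(f"{load['min_bytes_per_edge']:.1f}+ bytes per edge")         # 14.3+ bytes per edge
print(f"{load['min_gb_per_node']:.0f}+ GB per node")               # 833+ GB per node
# 140 trillion edges is an illustrative target, not a figure from the talk.
print(nodes_needed(140 * 10**12), "nodes for 140 trillion edges")  # 2400 nodes for 140 trillion edges
```

Doubling the edge count doubles the node count here, which is what "just add commodity hardware" amounts to under a linear-scaling assumption; the talk's measurements, not this arithmetic, are what support that assumption.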
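Since the post pitches Accumulo as a graph store, it may help to see how a graph fits into a sorted key/value table. One common layout (an assumption here, not necessarily the schema the NSA used) is an adjacency table: one entry per edge, with the source vertex as the row and the destination vertex as the column qualifier. Because keys are kept sorted, all of a vertex's out-edges sit next to each other and can be read with a single range scan. The `SortedEdgeTable` class below is a hypothetical toy built on Python's `bisect`, not Accumulo's client API.

```python
from bisect import bisect_left, insort
from typing import Dict, List, Tuple


class SortedEdgeTable:
    """Toy stand-in for a sorted key/value table (hypothetical, not Accumulo's API).

    Layout (an assumed adjacency-table schema): row = source vertex,
    column qualifier = destination vertex, value = edge weight.
    """

    def __init__(self) -> None:
        self._keys: List[Tuple[str, str]] = []          # kept in sorted order
        self._values: Dict[Tuple[str, str], int] = {}

    def put_edge(self, src: str, dst: str, weight: int = 1) -> None:
        key = (src, dst)
        if key not in self._values:
            insort(self._keys, key)  # sorted insert keeps a row's edges contiguous
        self._values[key] = weight   # re-putting an edge overwrites its value

    def neighbors(self, src: str) -> List[Tuple[str, int]]:
        """Range scan over row `src`: seek to its first key, read until the row changes."""
        start = bisect_left(self._keys, (src, ""))  # "" sorts before any qualifier
        out: List[Tuple[str, int]] = []
        for row, dst in self._keys[start:]:
            if row != src:
                break  # sorted order: no more entries for this row
            out.append((dst, self._values[(row, dst)]))
        return out


table = SortedEdgeTable()
table.put_edge("alice", "bob")
table.put_edge("bob", "carol")
table.put_edge("alice", "carol", weight=3)
print(table.neighbors("alice"))  # [('bob', 1), ('carol', 3)]
print(table.neighbors("carol"))  # []
```

The design choice worth noticing is that neighbor lookup is a seek plus a sequential read, which is the access pattern sorted, range-partitioned stores handle well at scale.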

A couple more slides detailing Accumulo:

Accumulo highlights:

- similar to HBase (both follow the design of Google's BigTable)

- can plug into an existing Hadoop cluster (it stores its data in HDFS)

- per the presentation, it is more scalable than MongoDB, HBase and Cassandra

- supports cell-level security (no surprise, considering the NSA developed it)

- supports a server-side programming mechanism called Iterators, which can be used for real-time analytics
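To make the cell-level security bullet concrete: Accumulo attaches a visibility expression to each key, such as `secret&(us|uk)`, and a scan returns the entry only if the user's authorizations satisfy it (in the Java API these are the `ColumnVisibility` and `Authorizations` classes). The sketch below is a hypothetical toy evaluator, `is_visible`, that mimics those rules as I understand them: labels joined with `&` (and) or `|` (or), parentheses required to mix the two, and an empty expression visible to everyone. It does not handle quoted labels and is not Accumulo's implementation.

```python
import re
from typing import List, Set

# One token: an operator, a parenthesis, or an unquoted label.
# Quoted labels, which Accumulo also supports, are not handled in this sketch.
_TOKEN = re.compile(r"\s*([&|()]|[A-Za-z0-9_\-:./]+)")


def _tokenize(expr: str) -> List[str]:
    tokens: List[str] = []
    expr = expr.strip()
    pos = 0
    while pos < len(expr):
        match = _TOKEN.match(expr, pos)
        if match is None:
            raise ValueError(f"bad character at position {pos} in {expr!r}")
        tokens.append(match.group(1))
        pos = match.end()
    return tokens


def is_visible(expr: str, auths: Set[str]) -> bool:
    """Hypothetical helper: does a user holding `auths` satisfy visibility `expr`?"""
    tokens = _tokenize(expr)
    if not tokens:
        return True  # empty visibility: readable by everyone
    pos = 0

    def parse_expr() -> bool:
        nonlocal pos
        values = [parse_term()]
        op = None
        while pos < len(tokens) and tokens[pos] in ("&", "|"):
            if op is not None and tokens[pos] != op:
                raise ValueError("mixing & and | requires parentheses")
            op = tokens[pos]
            pos += 1
            values.append(parse_term())
        return all(values) if op == "&" else any(values)

    def parse_term() -> bool:
        nonlocal pos
        if pos >= len(tokens):
            raise ValueError("unexpected end of expression")
        tok = tokens[pos]
        pos += 1
        if tok == "(":
            value = parse_expr()
            if pos >= len(tokens) or tokens[pos] != ")":
                raise ValueError("missing ')'")
            pos += 1
            return value
        if tok in ("&", "|", ")"):
            raise ValueError(f"unexpected {tok!r}")
        return tok in auths  # a bare label is satisfied if the user holds it

    result = parse_expr()
    if pos != len(tokens):
        raise ValueError(f"unexpected trailing tokens in {expr!r}")
    return result


print(is_visible("secret&(us|uk)", {"secret", "uk"}))  # True
print(is_visible("secret&(us|uk)", {"us"}))            # False
print(is_visible("", set()))                           # True
```

Because the check runs per entry, two cells in the same row can carry different labels, which is what distinguishes cell-level security from table- or row-level access control.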
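The Iterators bullet refers to code that runs inside Accumulo's tablet servers: Java classes configured on a table that transform the sorted stream of key/value pairs at scan or compaction time, for example filtering entries or combining them. Accumulo ships combiners such as `SummingCombiner`. The Python sketch below only imitates the idea; `summing_combiner` and the key layout are hypothetical. It collapses consecutive entries that share row, column family and column qualifier into one summed entry, so a client asking for vertex degrees receives totals rather than every edge.

```python
from itertools import groupby
from typing import Iterable, Iterator, List, Tuple

# Toy key: (row, column family, column qualifier, timestamp). Real Accumulo
# keys also carry a column visibility, omitted here for brevity.
Key = Tuple[str, str, str, int]


def summing_combiner(entries: Iterable[Tuple[Key, int]]) -> Iterator[Tuple[Key, int]]:
    """Sum the values of consecutive entries that share row, family and qualifier.

    Like a server-side iterator, it consumes an already-sorted stream and emits
    a shorter sorted stream, so the aggregation happens next to the data and the
    client only receives the totals. The output key keeps the newest timestamp.
    """
    for coords, group in groupby(entries, key=lambda kv: kv[0][:3]):
        items = list(group)
        newest_ts = max(key[3] for key, _ in items)
        yield coords + (newest_ts,), sum(value for _, value in items)


# Hypothetical use: count out-degree by writing a 1 per edge under the source vertex.
edges = [("alice", "bob"), ("alice", "carol"), ("bob", "carol")]
stream: List[Tuple[Key, int]] = sorted(
    (((src, "degree", "out", ts), 1) for ts, (src, _dst) in enumerate(edges)),
    key=lambda kv: (kv[0][:3], -kv[0][3]),  # newest timestamp first within a cell
)
for key, total in summing_combiner(stream):
    print(key[0], total)
# alice 2
# bob 1
```

Pushing the sum to the servers is what makes this useful for real-time analytics: counts stay current as edges are written, and a query reads one entry per vertex instead of scanning every edge.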